This was my first time backing up and setting up a new MediaWiki (-vagrant) instance from scratch so I decided to document it in the hope that future me might find it useful.

We (teams at Wikimedia) often use MediaWiki-Vagrant instances on Labs, err, Cloud VPS to test and demonstrate our projects. It’s also pretty handy to be able to use it when one’s local dev environment is out of order (way more common than you’d think).

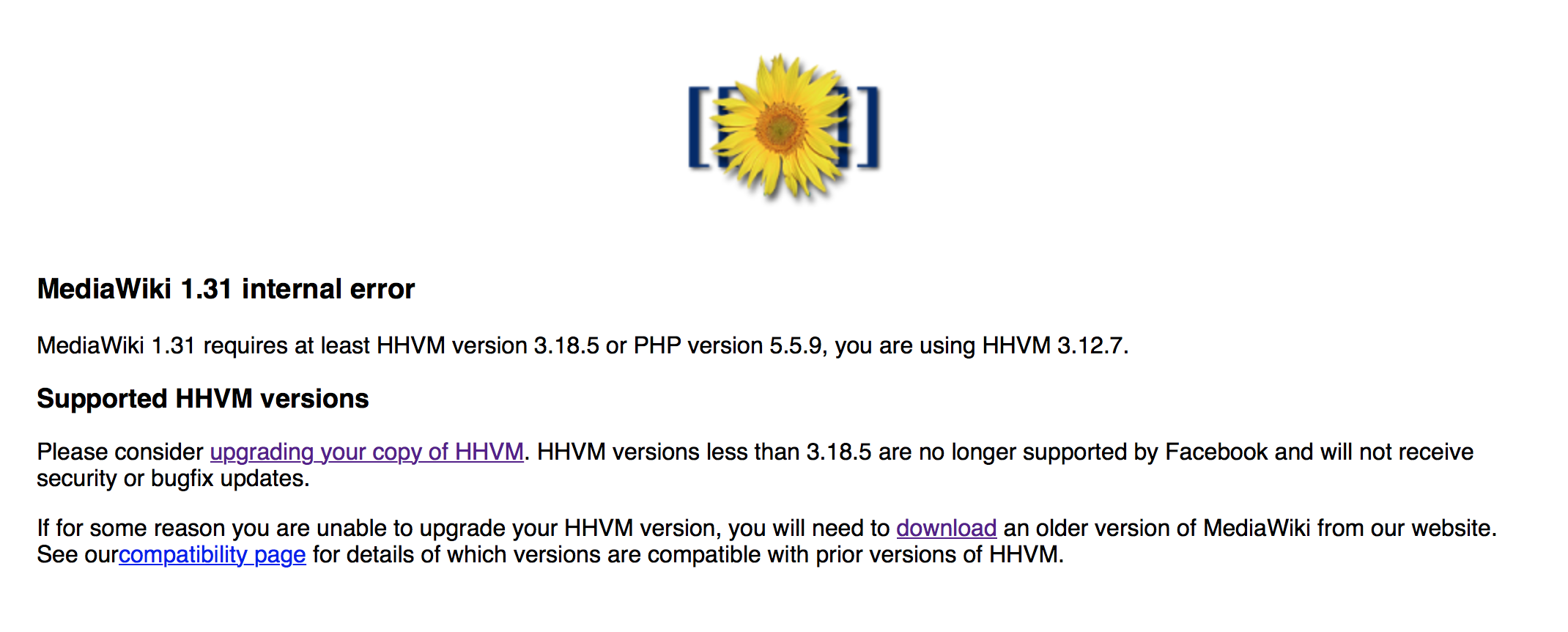

However, one day I woke up to…

…on our team instance (commtech-1.commtech.eqiad.wmflabs).

Okay, so I went in and tried to upgrade the HHVM package on the instance.

$ sudo apt-get install --only-upgrade hhvm

Reading package lists... Done

Building dependency tree

Reading state information... Done

hhvm is already the newest version.

0 upgraded, 0 newly installed, 0 to remove and 0 not upgraded.

$ hhvm --version

HipHop VM 3.12.7 (rel)

Compiler: 3.12.7+dfsg-1+wmf1~trusty1

Repo schema: a28132f015144d07eea2af17c422c8d422b7111e

No luck.

bd808 recommended I look into spawning a new instance now that HHVM is dropping support for Trusty anyway.

Well then. Let’s get rolling in the mud.

Backing up MediaWiki

I’d never done this before and was a bit afraid of messing up things badly. But we got nothing to lose here. Lucky for me there is some good documentation for doing this.

1. Backing up the database

This was straightforward enough:

$ ssh commtech-1.commtech.eqiad.wmflabs

$ cd /srv/mediawiki-vagrant

$ vagrant ssh

$ cd /vagrant

$ mysqldump -h hostname -u userid -p dbname > backup.sql

The values for hostname, userid, dbname and dbpassword can be found in your LocalSettings.php. Note that since this was a vagrant instance, the values were spread over both /mediawiki-vagrant/LocalSettings.php and /mediawiki-vagrant/mediawiki/LocalSettings.php. Also note that this command works from inside vagrant so don’t forget to ssh in, noob.
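As a rough sketch of where those values come from: MediaWiki keeps them in the variables $wgDBserver, $wgDBname and $wgDBuser, so a small shell helper can pull them out of a settings file. The get_setting helper and the sample file contents below are made up for illustration; point it at your real LocalSettings.php instead.

```shell
# Hypothetical helper: print the quoted string assigned to one PHP variable.
# $1 = variable name without the leading "$", $2 = path to the settings file
get_setting() {
  sed -n "s/^[[:space:]]*\\\$$1[[:space:]]*=[[:space:]]*[\"']\\(.*\\)[\"'];.*/\\1/p" "$2" | tail -n 1
}

# Sample settings file with made-up values, standing in for LocalSettings.php.
settings="$(mktemp)"
cat > "$settings" <<'EOF'
<?php
$wgDBserver = "127.0.0.1";
$wgDBname = "wiki";
$wgDBuser = 'wikiadmin';
EOF

db_host="$(get_setting wgDBserver "$settings")"
db_name="$(get_setting wgDBname "$settings")"
db_user="$(get_setting wgDBuser "$settings")"

# Print the command rather than running it, so this is safe to try anywhere.
echo "mysqldump -h $db_host -u $db_user -p $db_name > backup.sql"
rm -f "$settings"
```

The password is deliberately not extracted; the -p flag makes mysqldump prompt for it.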

2. Backing up the file system

This backs up the file system, including images and config files. It took a while. The tar was ~1.5GB.

$ tar zcvhf fsbackupmw.tgz /srv/mediawiki-vagrant/mediawiki

If, like me, you have no idea what zcvhf does - read this.
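For the impatient, here is a small sketch of what those flags do, run against a throwaway directory rather than the real wiki. The paths and file names are made up for the demo, and the v flag is left out only to keep the output quiet.

```shell
# Build a throwaway tree containing a symlink, to show what -h changes.
work="$(mktemp -d)"
mkdir -p "$work/wiki/images"
echo "hello" > "$work/real.txt"
ln -s "$work/real.txt" "$work/wiki/images/link.txt"

# z = compress with gzip, c = create an archive,
# h = store the file a symlink points to instead of the link itself,
# f = write to the archive file named next (v would list files as they are added).
tar -zchf "$work/demo.tgz" -C "$work" wiki

# t = list the archive contents without extracting anything.
listing="$(tar -tzf "$work/demo.tgz")"
echo "$listing"

# x = extract; the former symlink comes back as a regular file.
mkdir "$work/restore"
tar -xzf "$work/demo.tgz" -C "$work/restore"
ls -l "$work/restore/wiki/images"
```

Listing the archive with t before deleting anything is a cheap way to confirm the backup contains what you expect.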

3. Backing up the wiki content

This was easy to do with the dumpBackup.php script in MediaWiki.

$ php maintenance/dumpBackup.php --full > dump.xml
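Before trusting the dump, it might be worth a quick look at what is inside it. The sketch below counts page elements in an export file; the count_pages helper and the tiny sample XML are invented for illustration, and a real dump will be much larger.

```shell
# Hypothetical helper: count <page> elements in a MediaWiki XML export.
# Assumes each <page> tag sits on its own line, as in the sample below.
count_pages() {
  grep -c '<page>' "$1"
}

# Tiny made-up export standing in for dump.xml.
dump="$(mktemp)"
cat > "$dump" <<'EOF'
<mediawiki xml:lang="en">
  <page>
    <title>Main Page</title>
  </page>
  <page>
    <title>Sandbox</title>
  </page>
</mediawiki>
EOF

pages="$(count_pages "$dump")"
bytes="$(wc -c < "$dump" | tr -d ' ')"
echo "dump has $pages page(s) in $bytes bytes"
rm -f "$dump"
```

A page count of zero, or a file of only a few hundred bytes, would suggest the export did not capture the wiki's content.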

Exporting files to local system

If you were paying attention, you would have deduced by now that the instance I was backing up lived on a remote server. I used sftp to move the files from the remote server to my local system. I was shocked to see how easy this was! I’d tried messing with ftp a long time back and I couldn’t get it to work then. Since then I had a notion of how complex it is but I was so wrong!

This was all it took -

$ sftp commtech-1.commtech.eqiad.wmflabs

Connected to commtech-1.commtech.eqiad.wmflabs.

sftp> pwd

Remote working directory: /home/niharika29

sftp> get backup.sql

Fetching /srv/mediawiki-vagrant/backup.sql to backup.sql

/srv/mediawiki-vagrant/backup.sql 100% 12MB 1.6MB/s 00:07

sftp> get fsbackupmw.tgz

Fetching /srv/mediawiki-vagrant/fsbackupmw.tgz to fsbackupmw.tgz

/srv/mediawiki-vagrant/fsbackupmw.tgz 100% 1454MB 1.5MB/s 16:45

sftp> get dump.xml

Fetching /srv/mediawiki-vagrant/mediawiki/dump.xml to dump.xml

/srv/mediawiki-vagrant/mediawiki/dump.xml 100% 196 0.8KB/s 00:00

sftp> bye

Setting up a fresh instance

This is something I have done a handful of times now and it’s gotten easier though it seems like a complex thing on the surface. There’s ample good documentation.

TL;DR steps:

- Set up a new instance on Horizon in the project you want it to be a part of.

- Add role::labs::mediawiki_vagrant to the instance from the Puppet tab.

- SSH in to the instance: ssh commtech-2.commtech.wmflabs.org

- Run puppet: sudo puppet agent --test --verbose

- Log out and in.

- cd /srv/mediawiki-vagrant; vagrant up; vagrant provision

- Verify it works: curl http://localhost:8080/wiki/Main_Page

- Set up a proxy for your instance on Horizon (DNS menu). Pick port 8080.

- https://commtech2.wmflabs.org - Tada!
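The verification step can be wrapped in a small retry loop, in case the wiki is not answering yet the first time you ask. This is only a sketch: retry is a made-up helper, and the attempt count and delay in the usage line are arbitrary.

```shell
# Hypothetical helper: run a command up to N times, pausing between attempts.
# $1 = max attempts, $2 = seconds to sleep between attempts, rest = the command
retry() {
  attempts="$1"
  delay="$2"
  shift 2
  n=1
  while [ "$n" -le "$attempts" ]; do
    if "$@"; then
      return 0
    fi
    # Only pause if another attempt is still to come.
    if [ "$n" -lt "$attempts" ]; then
      sleep "$delay"
    fi
    n=$((n + 1))
  done
  return 1
}

# Harmless demo: "true" succeeds on the first attempt.
retry 3 0 true && echo "command succeeded"

# On the instance you could wrap the curl check from the steps above, e.g.:
#   retry 10 5 curl -fsS -o /dev/null http://localhost:8080/wiki/Main_Page
```

The -f flag makes curl return a non-zero exit code on HTTP errors, which is what lets the loop tell a working wiki from an error page.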

Importing backup files to new instance

Reverse sftp steps:

$ sftp commtech-2.commtech.eqiad.wmflabs

Connected to commtech-2.commtech.eqiad.wmflabs.

sftp> put fsbackupmw.tgz

Uploading fsbackupmw.tgz to /home/niharika29/fsbackupmw.tgz

fsbackupmw.tgz 100% 1454MB 633.9KB/s 39:09

sftp> put backup.sql

Uploading backup.sql to /home/niharika29/backup.sql

backup.sql 100% 0 0.0KB/s 00:00

sftp> put dump.xml

Uploading dump.xml to /home/niharika29/dump.xml

dump.xml 100% 196 1.4KB/s 00:00

sftp> bye
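The sizes in the transfer log are worth a glance, and you can go one step further by comparing checksums on both ends. Here is a minimal sketch using cksum, which prints a CRC and a byte count. The fingerprint helper and the demo files are made up; in practice you would run it once on each machine and compare the output by eye.

```shell
# Hypothetical helper: print "CRC SIZE" for a file (no file name, so the
# output from two machines can be compared directly).
fingerprint() {
  cksum < "$1"
}

# Demo files standing in for the local and remote copies.
work="$(mktemp -d)"
printf 'CREATE TABLE demo (id INT);\n' > "$work/local.sql"
cp "$work/local.sql" "$work/remote.sql"
: > "$work/empty.sql"   # simulates a transfer that produced an empty file

if [ "$(fingerprint "$work/local.sql")" = "$(fingerprint "$work/remote.sql")" ]; then
  match=yes
else
  match=no
fi
echo "copies match: $match"

# The second field is the size in bytes; 0 means the file is empty.
empty_size="$(fingerprint "$work/empty.sql" | awk '{print $2}')"
echo "empty.sql size: $empty_size"
```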

Restoring MediaWiki

Again, this sounds pretty tricky. But where there’s documentation, there’s a way.

1. Restoring the database

My new mw-vagrant instance came with the wiki database so I had to drop that first, but for good measure I backed it up just in case things go awry:

$ ssh commtech-2.commtech.eqiad.wmflabs

$ cd /srv/mediawiki-vagrant/

$ vagrant ssh

[MediaWiki-Vagrant ASCII-art login banner]

$ mysqldump -h '127.0.0.1' -u 'wikiadmin' -p --default-character-set=binary wiki > backupnew.sql

Enter password:

$ cd /vagrant/

$ mysqladmin -u wikiadmin -p drop wiki

Enter password:

Dropping the database is potentially a very bad thing to do.

Any data stored in the database will be destroyed.

Do you really want to drop the 'wiki' database [y/N] y

Database "wiki" dropped

$ mysqladmin -u wikiadmin -p create wiki

Enter password:

$ mysql -u wikiadmin -p wiki < backupold.sql

Enter password:
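A sanity check on the dump file before the drop step is cheap insurance, since the drop cannot be undone. By default mysqldump ends its output with a "-- Dump completed" comment, so one rough test is to look for that on the last line. The dump_looks_complete helper, the sample files and the date in them are hypothetical, and the check would not apply to dumps written with comments disabled.

```shell
# Hypothetical helper: succeed if the file is non-empty and its last line is
# mysqldump's closing "-- Dump completed" comment.
dump_looks_complete() {
  [ -s "$1" ] && tail -n 1 "$1" | grep -q '^-- Dump completed'
}

# Made-up sample files: one plausible dump, one empty file.
work="$(mktemp -d)"
printf 'CREATE TABLE demo (id INT);\n-- Dump completed on 2018-01-01 00:00:00\n' > "$work/good.sql"
: > "$work/empty.sql"

for f in good.sql empty.sql; do
  if dump_looks_complete "$work/$f"; then
    echo "$f: looks complete"
  else
    echo "$f: do NOT restore from this"
  fi
done
```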

Okay, that seems to have worked.

Let’s move on to step 2.

2. Restoring content from dump

This seemed easy. Docs: Link.

And….

$ php mediawiki/maintenance/importDump.php < dump.xml

PHP Warning: XMLReader::read(): uploadsource://49d8375e96a198ed0d05abb86da047d2:1: parser error : Document is empty in /vagrant/mediawiki/includes/import/WikiImporter.php on line 572

PHP Stack trace:

PHP 1. {main}() /vagrant/mediawiki/maintenance/importDump.php:0

PHP 2. require_once() /vagrant/mediawiki/maintenance/importDump.php:350

PHP 3. BackupReader->execute() /vagrant/mediawiki/maintenance/doMaintenance.php:94

PHP 4. BackupReader->importFromStdin() /vagrant/mediawiki/maintenance/importDump.php:116

PHP 5. BackupReader->importFromHandle() /vagrant/mediawiki/maintenance/importDump.php:287

PHP 6. WikiImporter->doImport() /vagrant/mediawiki/maintenance/importDump.php:345

PHP 7. XMLReader->read() /vagrant/mediawiki/includes/import/WikiImporter.php:572

Warning: XMLReader::read(): uploadsource://49d8375e96a198ed0d05abb86da047d2:1: parser error : Document is empty in /vagrant/mediawiki/includes/import/WikiImporter.php on line 572

Call Stack:

0.0011 409040 1. {main}() /vagrant/mediawiki/maintenance/importDump.php:0

0.0053 685024 2. require_once('/vagrant/mediawiki/maintenance/doMaintenance.php') /vagrant/mediawiki/maintenance/importDump.php:350

0.1899 9788992 3. BackupReader->execute() /vagrant/mediawiki/maintenance/doMaintenance.php:94

0.2157 11012768 4. BackupReader->importFromStdin() /vagrant/mediawiki/maintenance/importDump.php:116

0.2157 11013256 5. BackupReader->importFromHandle() /vagrant/mediawiki/maintenance/importDump.php:287

0.2249 11315864 6. WikiImporter->doImport() /vagrant/mediawiki/maintenance/importDump.php:345

0.2249 11315864 7. XMLReader->read() /vagrant/mediawiki/includes/import/WikiImporter.php:572

PHP Warning: XMLReader::read(): Error: You might be using an older HHVM version. in /vagrant/mediawiki/includes/import/WikiImporter.php on line 572

PHP Stack trace:

PHP 1. {main}() /vagrant/mediawiki/maintenance/importDump.php:0

PHP 2. require_once() /vagrant/mediawiki/maintenance/importDump.php:350

PHP 3. BackupReader->execute() /vagrant/mediawiki/maintenance/doMaintenance.php:94

PHP 4. BackupReader->importFromStdin() /vagrant/mediawiki/maintenance/importDump.php:116

PHP 5. BackupReader->importFromHandle() /vagrant/mediawiki/maintenance/importDump.php:287

PHP 6. WikiImporter->doImport() /vagrant/mediawiki/maintenance/importDump.php:345

PHP 7. XMLReader->read() /vagrant/mediawiki/includes/import/WikiImporter.php:572

[dfed825ae694612f3d132ec8] [no req] MWException from line 576 of /vagrant/mediawiki/includes/import/WikiImporter.php: Expected <mediawiki> tag, got

Backtrace:

#0 /vagrant/mediawiki/maintenance/importDump.php(345): WikiImporter->doImport()

#1 /vagrant/mediawiki/maintenance/importDump.php(287): BackupReader->importFromHandle(resource)

#2 /vagrant/mediawiki/maintenance/importDump.php(116): BackupReader->importFromStdin()

#3 /vagrant/mediawiki/maintenance/doMaintenance.php(94): BackupReader->execute()

#4 /vagrant/mediawiki/maintenance/importDump.php(350): require_once(string)

#5 {main}

Hmm, my guess is that some content wasn’t imported because it depended on the HHVM version. I did see some pages so it hasn’t entirely failed.

¯\_(ツ)_/¯

Let’s move on.

3. Restoring the file system

This is probably the biggest hurdle.

$ tar -xvzf fsbackupmw.tgz

That spit out the list of files it unpacked. I had to do some mv to get it in the right spot. Okay.

There were some dependency errors.

$ composer install; composer update

That fixed those.

I can login with my credentials. It all works, yay!

But I still see….

Uh oh. Bizarrely, I couldn’t find that file on either the old instance or the new one. After spending a bit of time on this, I decided to just upload another version and that worked!

I leave you with a philosophical conundrum. The instance has the same content and data and web-proxy as before but a new underlying infrastructure. Can we say that it’s the same instance?